[Traveling AI Bot] Enjoying Hallucinations

The travel bots bring back photos from various places, subtly capturing the essence of what's there. I prefer the charm of everyday street scenes over well-known tourist spots. Rather than images that highlight the distinct differences of famous landmarks, I appreciate scenes that resemble the views I encounter on my own walks, suggesting that people far away see similar landscapes in their daily lives.

Observations like "Ah, this is a 90s shopping street," "This kind of townscape was popular in the 2000s," or "In any area, the downtown, office districts, residential areas, fields, and forests are laid out in this pattern" make distant places feel closer to home. That is why Street View photos, where the background and the character's shadow line up nicely, resonate with me more than typical tourist landmark photos (these were the first kind I created and used).

Mi's Journey/ Stepping into the Kita Ward of Niigata City, there's a blend of urban vitality and a unique tranquility. The Niigata University of Health and Welfare is where students who will lead the future of the medical world are studying. Next to it is the Garden Cafe Kamonohashi... (Powered by Google Map APIs, ChatGPT-4, CounterfeitXL_β-SDXL, etc.) pic.twitter.com/NJGSnyX0jj

— mi tripBot (@marble_walker) October 26, 2023

The suburban feel of a larger city. A picture with a wide road and an ordinary bus stop. It's primarily a residential area, but there's a school nearby. It seems like a pleasant place for everyday cycling. (The full text of the tweets is available on the blog: https://akibakokoubou.jp/mi-runner/)

One day, I was considering a solo trip. I decided to travel from Osaka to Nara and stay at "Hotel Nikko Nara" in the town of Omiya. This hotel retains a traditional appearance while offering Nara miso and sweet chestnuts in the guest rooms, and features a traditional garden... (Powered by Google Map APIs, Stable-LM, etc.)

— mi tripBot (@marble_walker) November 1, 2023

Mei's Journey/ The facility in front of me is "Shizuoka City." Shizuoka City is the capital of Shizuoka Prefecture and has Shizuoka Station, a stop on the Tokaido Shinkansen.

The facility in front of me is "Shizuoka City." Shizuoka City is the capital of Shizuoka Prefecture and has Shizuoka Station, a stop on the Tokaido Shinkansen... (Powered by Google Map APIs, ELYZA-Llama, emi-SDXL, etc.) pic.twitter.com/dHeZBgdmYB

— mei trip Bot (@marble_walker_i) October 23, 2023

But sometimes, they say things that don't make sense...

Hallucinations in Traveling AI Bots

Hallucinations (falsehoods generated by AI) can cause various problems when using AI in business, so companies are eager to suppress them. If an AI logically and coherently asserts incorrect or baseless information in a work context, it can lead to significant confusion.

However, regarding the travel bots, I find that the more pronounced the hallucinations in their text, the more interesting they become.

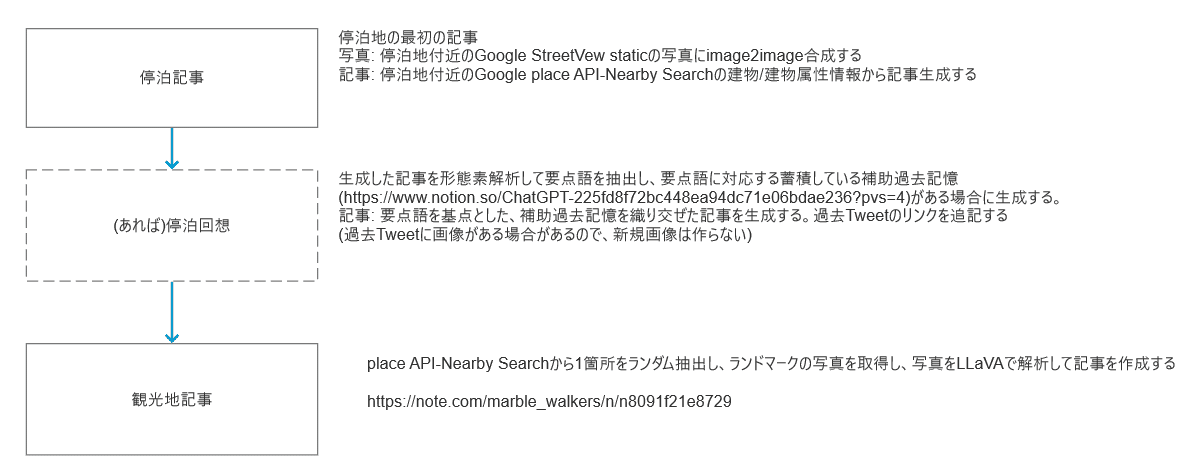

The input to the Large Language Model (LLM) is building data obtained from the Google Places API Nearby Search (places[n].displayName and types), passed to the LLM as a markdown table. https://developers.google.com/maps/documentation/places/web-service/nearby-search

Therefore, apart from the building names and general attributes (restaurant, government office, retail store, etc.), everything else is hallucinated by the LLM. Descriptive expressions like "on the right...", "the tranquility...", "blue...", "when you taste it..." are all creations of the AI, which strings together plausible expressions from what the language model has learned. (For tourist photos, we currently use LLaVA for image analysis, so in that case the descriptive expressions are recognized from the images.)
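As a rough illustration of the input described above, here is a minimal sketch that turns Nearby Search results into a markdown table. It assumes the response shape of the Places API (New), where `displayName` is an object whose `text` field holds the readable name. The function name, the column headers, and the sample data are all made up for this example and are not taken from the bots' actual code.

```python
def places_to_markdown_table(places):
    """Render Nearby Search results as a two-column markdown table."""
    lines = ["| name | types |", "| --- | --- |"]
    for place in places:
        # displayName is a localized-text object; the readable name is under "text".
        name = place.get("displayName", {}).get("text", "")
        # Escape pipes so a building name cannot break the table layout.
        name = name.replace("|", "\\|")
        types = ", ".join(place.get("types", []))
        lines.append(f"| {name} | {types} |")
    return "\n".join(lines)


# Made-up sample in the shape of a Nearby Search response body (assumption).
sample_response = {
    "places": [
        {"displayName": {"text": "Niigata University of Health and Welfare"},
         "types": ["university"]},
        {"displayName": {"text": "Garden Cafe Kamonohashi"},
         "types": ["cafe", "restaurant"]},
    ]
}

table = places_to_markdown_table(sample_response["places"])
print(table)
```

A table like this carries only names and coarse categories, which is why anything more specific in the generated text has to come from the model itself.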

Traveling AI Bots and Multiple LLMs

The travel bots use multiple LLMs. For each stopover an LLM is chosen at random, though we try to avoid using the same one twice in a row (this is not strictly prohibited). At this point ChatGPT-4 is clearly the most proficient writer, as the outputs on X/Twitter show.
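One way to get "random, but rarely the same LLM twice in a row" is weighted random choice, sketched below. This is an assumption about how such a selection could work, not the bots' actual logic: the function name `pick_llm`, the `repeat_weight` parameter, and its default value are hypothetical.

```python
import random

# Model labels taken from the post; the list itself is illustrative.
MODELS = ["ChatGPT-4", "ELYZA", "Rinna", "Stable-LM"]


def pick_llm(candidates, previous=None, repeat_weight=0.2, rng=random):
    """Pick a model at random, down-weighting the one used at the previous stop.

    repeat_weight=1.0 is plain uniform choice; 0.0 forbids repeats entirely.
    """
    weights = [repeat_weight if name == previous else 1.0 for name in candidates]
    if sum(weights) == 0:
        # Only the previous model is available, so a repeat is unavoidable.
        weights = [1.0] * len(candidates)
    return rng.choices(candidates, weights=weights, k=1)[0]


# Simulate eight stopovers with a seeded generator for repeatability.
rng = random.Random(0)
previous = None
route = []
for _ in range(8):
    previous = pick_llm(MODELS, previous, rng=rng)
    route.append(previous)
print(route)
```

A small nonzero `repeat_weight` matches the "discouraged but not prohibited" behavior: repeats still happen occasionally, just less often than under uniform sampling.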

However, after producing a large number of outputs, I notice that "even ChatGPT-4 has only a few writing patterns." Even if the temperature (degree of randomness) is set to the maximum and regional terms are included in the prompt, the generated texts tend to resemble each other as long as the overall structure of the prompt stays the same. The same happens with people: after writing hundreds of travel reports, certain templates emerge.

In human reports, the tone can change depending on whether the text is written upon waking up, after lunch, or in the evening. In the same way, when several LLMs are mixed, I think it's enjoyable to view any odd sentences that slip in as individual characteristics. When the LLMs are different, the texts vary significantly, and the nature of the hallucinations also changes considerably. I believe this helps reduce the monotony of the writing.

(Note: The prompts that each LLM interprets well vary significantly, so the prompts differ for each LLM. Post-processing is also applied to remove commonly generated erroneous sentences; some LLMs, for example, tend to start their output with "Understood". Since this is not a benchmark, priority is given to obtaining "more plausible outputs.")
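The post-processing mentioned in the note could look something like the sketch below, which drops a leading filler sentence such as "Understood." before the text is posted. The opener list, the function name, and the regular expression are assumptions made for illustration; the real filters are not shown in this post and would need to match each model's habits (and the original Japanese phrases).

```python
import re

# Hypothetical list of filler openers; extend it per model as needed.
FILLER_OPENERS = ["Understood", "Certainly"]


def strip_filler_opener(text, openers=FILLER_OPENERS):
    """Remove one leading sentence that begins with a known filler opener."""
    alternatives = "|".join(re.escape(opener) for opener in openers)
    # Match the opener at the very start, then everything up to and
    # including the first sentence-ending punctuation mark.
    pattern = r"^\s*(?:" + alternatives + r")\b[^.!?\n]*[.!?]\s*"
    return re.sub(pattern, "", text, count=1)


raw = "Understood. The bus stop faces a wide road lined with houses."
print(strip_filler_opener(raw))
```

Text that does not begin with one of the listed openers passes through unchanged, so the filter can be applied to every model's output without special cases.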

Currently, I personally prefer the outputs from ELYZA (https://huggingface.co/elyza/ELYZA-japanese-Llama-2-7b-fast-instruct) for their naturalness, stability, and degree of hallucination. Rinna (https://huggingface.co/rinna/japanese-gpt-neox-3.6b-instruction-ppo) ...

First published on November 3, 2023

https://note.com/marble_walkers/n/ncb7788cbed74

https://akibakokoubou.jp/2023/11/03/[旅するai-bot]-ハルシネーションを楽しむということ/